AI + Shows = Immersion 2.0: When Performances Feel the Audience

🎬 Introduction

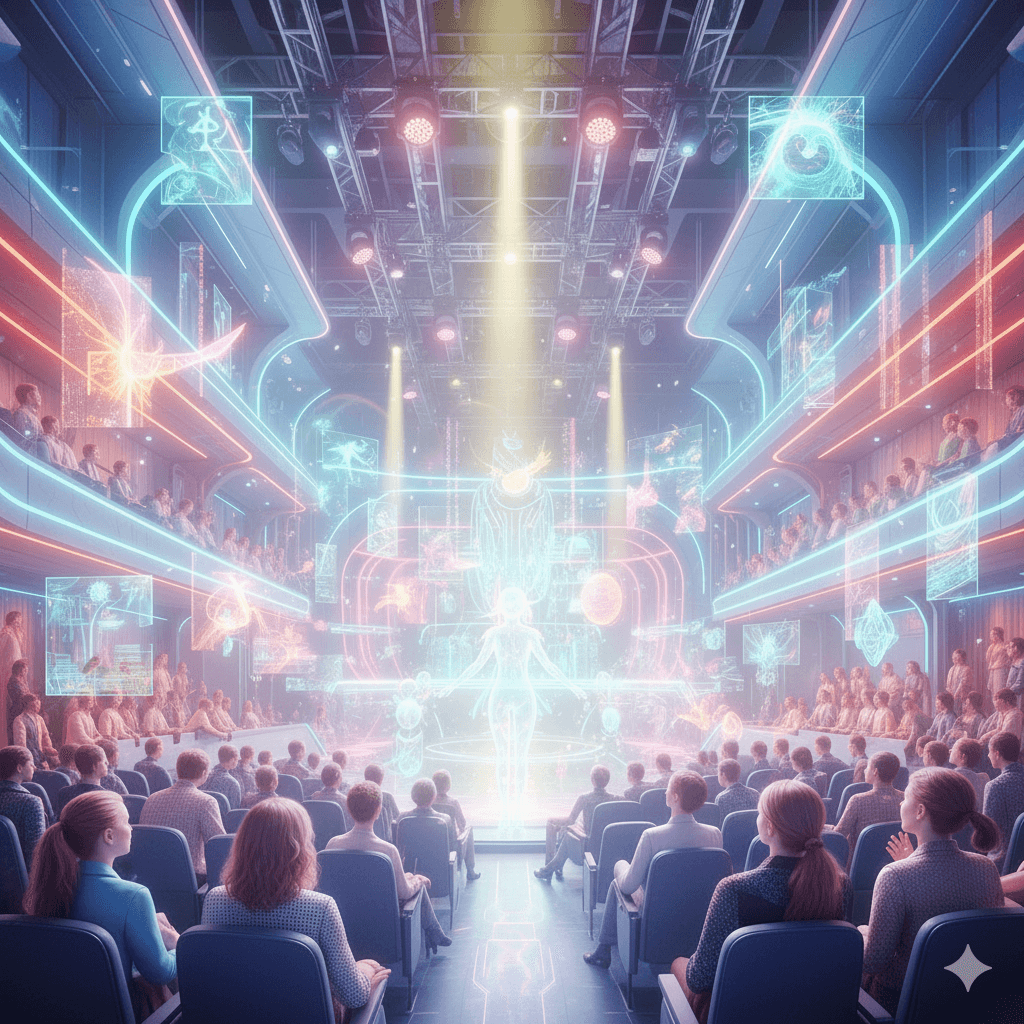

Imagine sitting in a theater. Lights, music, projections surround you.

Suddenly, the light changes in response to your smile.

A hologram turns directly toward you.

That’s not magic — that’s artificial intelligence.

💡 “Shows no longer just perform — they feel the audience.”

Welcome to Immersion 2.0, where technology senses emotions and adapts in real time to each viewer in the room.

📖 Table of Contents

- What “Immersion 2.0” Really Means

- How AI Learns to Feel the Audience

- Global Projects Where AI Reacts to Emotions

- Tech Behind the Curtain

- Emotions as a Script: What’s Next

- What to Expect as Viewers and Artists

- Conclusion

🌀 What “Immersion 2.0” Really Means

Once, immersion meant sound, light, and 3D visuals.

Now, it’s AI interactivity — shows that adapt to audience mood, facial expression, and even voice tone.

The term Immersion 2.0 describes the shift from passive watching to emotional participation.

The show becomes a living organism that senses the collective energy of the crowd.

💬 How AI Learns to Feel the Audience

Modern AI analyzes reactions in real time using multimodal data:

| Technology | Function | Example |
| --- | --- | --- |
| Emotion Recognition AI | Detects micro-expressions and facial cues | Affectiva, EmotionNet |
| Voice Sentiment Analysis | Identifies joy, surprise, boredom by tone | DeepVoice |
| Motion Capture + LIDAR | Tracks gestures and body movement | Muse, LIDAR stages |
| Reactive Algorithms | Dynamically change visuals and sound | Runway ML, Unreal Engine AI |

🧩 Example:

- When the audience laughs — the stage “blooms” in bright colors.

- When everyone falls silent — the music shifts to a minimalist mood.
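A minimal sketch of how rules like these could be written, assuming two hypothetical signals (`laughter` and `noise_level`, both scaled from 0 to 1) supplied by an upstream audio or vision model. The thresholds and the `StageCue` fields are illustrative and not taken from any real show system.

```python
from dataclasses import dataclass


@dataclass
class StageCue:
    """A hypothetical stage instruction: a colour palette plus a music mood."""
    palette: str
    music: str


def choose_cue(laughter: float, noise_level: float) -> StageCue:
    """Pick a stage cue from two assumed audience signals in [0, 1]."""
    # Strong laughter wins: the stage "blooms" in bright colours.
    if laughter >= 0.6:
        return StageCue(palette="bright", music="upbeat")
    # A near-silent room shifts the music to a minimalist mood.
    if noise_level <= 0.1:
        return StageCue(palette="dim", music="minimalist")
    # Otherwise leave the current look and sound alone.
    return StageCue(palette="neutral", music="unchanged")
```

For instance, `choose_cue(0.8, 0.5)` returns the bright, upbeat cue, while a hushed room such as `choose_cue(0.0, 0.05)` yields the minimalist one.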

🌍 Global Projects Where AI Reacts to Emotions

| Project | City | Unique Feature |
| --- | --- | --- |
| The Wizard of Oz at Sphere | 🇺🇸 Las Vegas, USA | AI adjusts visuals and lighting based on crowd emotion |
| Silent Echo | 🇬🇧 London, UK | The storyline changes depending on breathing and heart rate |
| Deep Symphony | 🇰🇷 Seoul, South Korea | An AI composer adapts orchestral music to collective emotions |
| MetaOpera | 🇩🇪 Berlin, Germany | Cameras track eye gaze; characters react to where viewers look |
| Sphere 360° | 🇷🇺 Moscow, Russia | Drones and lights respond to applause and crowd noise levels |


⚙️ Tech Behind the Curtain: Sensors, Cameras, Neural Networks

Key technologies powering these living performances:

- 🎥 Emotion-tracking cameras — EmotionNet, Affectiva

- 🎤 Voice sensors — DeepVoice

- 🧠 Biometric readers — Muse, Empatica

- ⚡ Generative visuals — Runway, Unreal Engine AI Tools

- 🔄 Real-time algorithms — OpenAI Realtime API

🧠 Three stages run in a continuous loop:

- Capturing audience reactions

- Emotion analysis

- Real-time feedback — sound, light, video, or story shift
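The loop above can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular venue works: each "frame" is a list of hypothetical per-viewer engagement scores between 0 and 1, the crowd value is a plain average, and the feedback is a single 0 to 255 dimmer level. The function names and the smoothing factor `alpha` are invented for the example.

```python
from statistics import mean


def collective_score(viewer_scores: list[float]) -> float:
    """Average per-viewer scores (each in [0, 1]) into one crowd value."""
    return mean(viewer_scores) if viewer_scores else 0.0


def smooth(previous: float, current: float, alpha: float = 0.2) -> float:
    """Exponential moving average, so the stage does not jump on every frame."""
    return alpha * current + (1 - alpha) * previous


def light_intensity(score: float) -> int:
    """Map a score to a 0-255 dimmer value, clamping out-of-range input."""
    clamped = max(0.0, min(1.0, score))
    return round(clamped * 255)


def run_pipeline(frames: list[list[float]]) -> list[int]:
    """Run capture -> analysis -> feedback once per frame."""
    state = 0.0
    outputs = []
    for viewer_scores in frames:                 # 1. captured audience reactions
        raw = collective_score(viewer_scores)    # 2. emotion analysis (assumed upstream)
        state = smooth(state, raw)
        outputs.append(light_intensity(state))   # 3. real-time feedback
    return outputs
```

The smoothing step is a design choice: without it, one viewer's brief reaction could flip the lighting from frame to frame, so the sketch lets the crowd value drift toward each new reading instead.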

🎭 Emotions as a Script: What’s Next

AI-driven dramaturgy is already here.

Future performances will develop scenes based on the audience’s collective state.

🎡 Emerging directions:

- Smart venues — acoustics and lighting that adapt to audience energy

- Personalized scenes — each viewer sees a slightly different version

- Emotional VR concerts — visuals respond to breathing rhythm

🎙️ “The great artist is the one who feels the audience. Now, AI can too.”

— Adapted from Konstantin Stanislavski

💡 What to Expect as Viewers and Artists

Pros:

- Each show becomes one-of-a-kind

- Deep emotional engagement

- New creative formats and improvisation

Cons:

- Privacy concerns — AI reads faces and emotions

- Possible sensory overload

- Risk of technology overshadowing the artist

🧠 Infographic: How a Show Reads Its Audience

Camera 🎥 → Emotion 😊 → Analysis 🤖 → Reaction 💡 → Effect 🌈

A simple chain showing how human emotion drives stage transformation in real time.

🎬 Conclusion

Entertainment is no longer static.

Artificial intelligence has turned performances into mirrors of human emotion.

We’re no longer just spectators — we’re part of the story.

🌐 Explore more about the future of AI creativity at AIMarketWave.com